Research for Autodesk Virtual Agent

UX RESEARCH, PROTOTYPING

Overview

I led the initial research project team for one of Autodesk’s first forays into conversational AI.

This project tested the viability of an automated help service at Autodesk from the customer’s perspective. We researched how customers felt about virtual assistants, what abilities such an assistant should have, and how it should communicate.

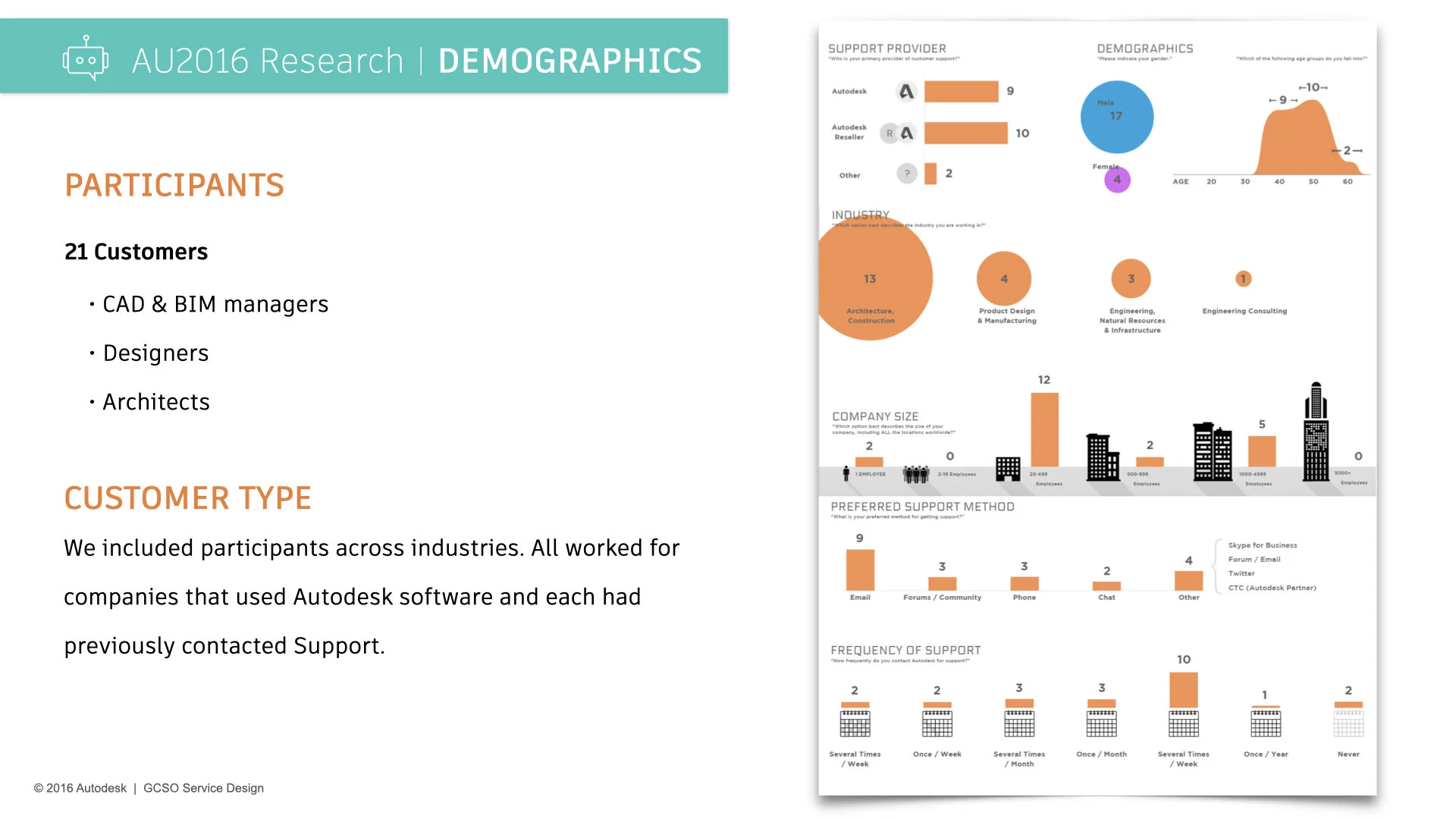

Our project culminated in live user testing at Autodesk University 2016. We used three different research methods, and held in-person interviews with 21 customers.

This research was the first step in introducing conversational AI at Autodesk, and led to the creation of AVA, Autodesk Virtual Assistant.

01 About This Project

In addition to being my first serious introduction to machine learning, this was my first time working with and coordinating five other people as a project lead.

Autodesk offers multiple forms of support for our customers, but none of them are instantaneous. Most customers cannot pick up the phone and immediately reach someone on the other end; they have to wait for an agent to get back to them. For simple questions or issues, an automated system may be faster than a live person. This project explored the feasibility of implementing a chatbot on autodesk.com to help with repetitive tasks and common questions.

In 2016 chatbots were not as common as they are today. I led research and testing around customer engagement, and what our best practices should be moving forward. While Autodesk’s chatbot has since gone through several names and iterations, our initial research contributed to the development of what is now known as AVA, Autodesk’s Virtual Agent.

We tested different conversation styles, monitored chat interactions, and evaluated backend capabilities. All of it contributed to answering one overarching question:

What would make customers comfortable using an automated bot to solve their problem instead of a live person?

Problem Statement

Customers with Tier 1 issues are unsure of where to go for help, and often end up in the forums or on AKN. Customers value quality of help and speed of response, as well as specialized responses to their questions and issues. Can we use automation to help these customers while reducing both case loads and wait times?

Project Details

Throughout the research process, we used surveys, prototypes, and testing methodologies to find the optimal personality and interaction model to help customers and ease frustrations. It was important to determine what an AI would be good at and where it should be deployed to best serve customers.

We compiled our findings and made recommendations that would support the specific business goal of deploying a chatbot to assist customers with frequent known issues in a helpful manner.

Project Title: Chatbot Automation Research

Time Frame: September 2016 - February 2017

Skills Used: Research, Heuristics, Interactive Prototyping, User Testing

My Role

Project Lead

Led investigative efforts of a cross-functional team of 6 people including other researchers and designers, a content writer, a data scientist, and a developer.

Created the Research Plan

Chose research methods; created the surveys, prototype, and assets; and planned the moderated testing at Autodesk University 2016.

Evaluation & Prototyping

Heuristic analysis of existing chatbots. Used IBM Watson to build our prototype conversational AI that was deployed to Slack by our developer.

Analysis & Insights

Compiled research, testing, and analysis into a set of best practices for chatbots at Autodesk.

02 Process

Where We Started

When we began this project, a basic chatbot named Otto had already been introduced to our Sales Partners. Otto was built to help guide users through processing transactions, so its conversation skills were very limited. One of our business goals was to move from this chatbot used by a few hundred people in a very specific set of circumstances, to a bot with potentially hundreds of use cases and thousands of users. Despite these differences, Otto was a good place for us to start the research process and begin understanding the technology.

We conducted a heuristic evaluation of Otto using the standards developed by the Nielsen Norman Group, focusing on the conversational nature of a chatbot. A quick summary of our findings:

Positives:

Otto accomplishes its main task of providing activation codes instantaneously and it was easy to switch to in-person help.

Drawbacks:

Confusing user flows, overly verbose and disjointed instructions, no marking of required fields, and no indication that content on the right could change.

Takeaways:

Otto was very perfunctory, with the main focus on forms and help articles rather than chat flows or active dialogue. There were few takeaways that we could bring to designing a conversational bot, but it did give us ideas about the relationship between chatbots and transactions.

Partial screenshot of Otto, deployed in Autodesk Partner Center

Where We Ended

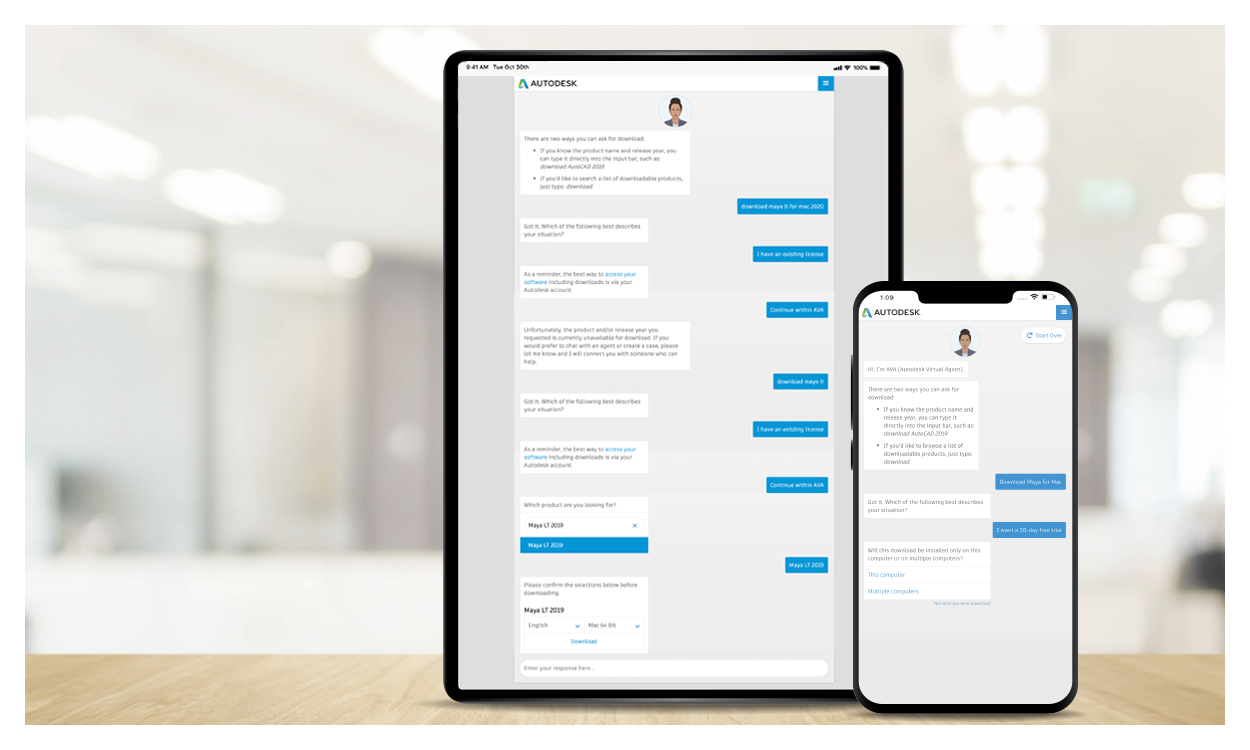

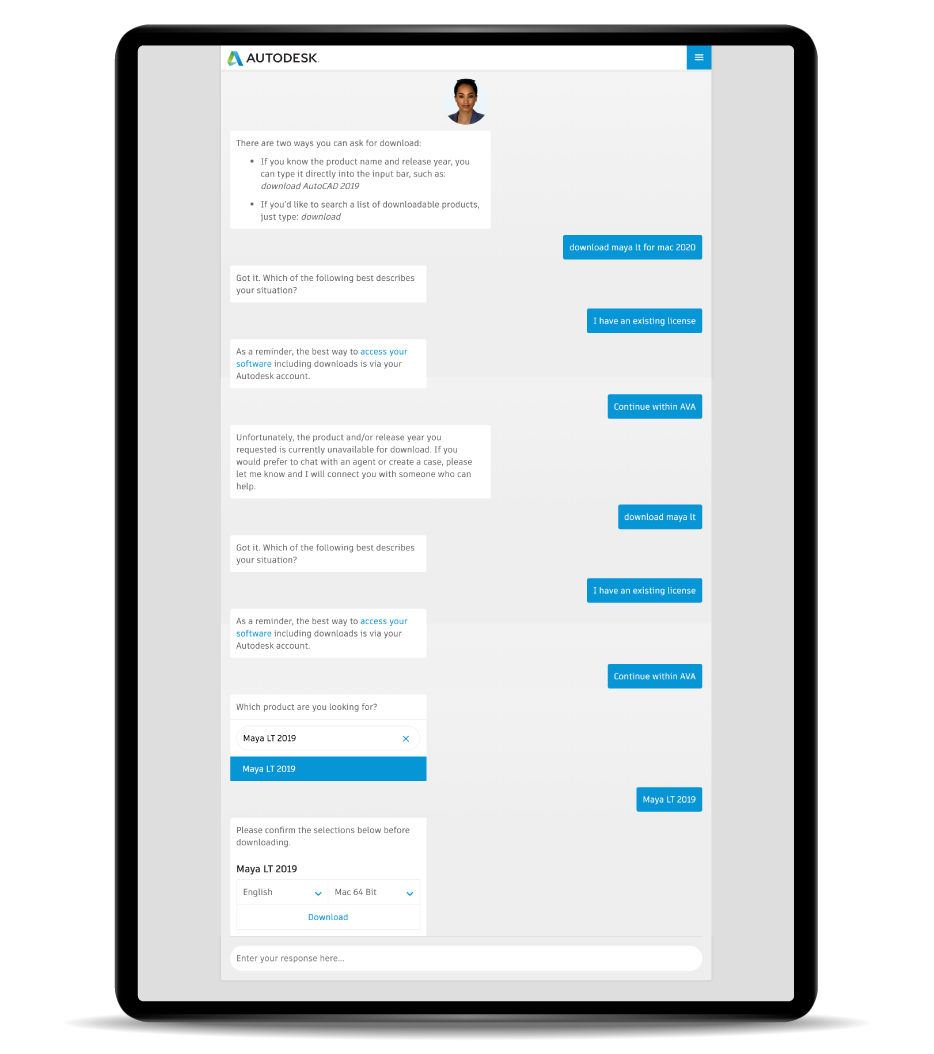

Using the insights and recommendations we delivered, our Help and Learning team created AVA (Autodesk Virtual Assistant), which has gone through several iterations since its initial introduction 3 years ago.

Autodesk’s goal is to become a highly customer-centric, digital technology company. Our recommendations helped move the company further towards this goal:

Chatbots are available 24/7 and AVA was programmed to help with the top customer issues, like downloading and installing products. This in turn reduced case loads and wait times.

AVA is efficient, solving 86% of customer issues in under 4 minutes and connecting you to a human if it is unable to help.

AVA is professional and helpful. It lists its capabilities upfront so users don’t waste their time.

Users are sometimes given specific options for responding to AVA. This speeds up conversations by eliminating typing, and it lets AVA guide the conversation, which minimizes error responses.

AVA in 2017, 2019, and 2020

How We Got There

Using Otto as our starting point, the next step was to look at more examples and formulate our overall research plan.

03 Research

Key Challenges

Based on our heuristic evaluation, we had a fairly good idea of what questions still needed answering. Otto’s capabilities and interface demonstrated the transactional potential an AI has, but the conversational aspect was completely missing. We started by identifying the three big problems we needed to solve:

How much of the AI do we expose to the customer? How do we set expectations of what it can do for them?

What should an Autodesk bot sound like and how should it interact with customers? What should we avoid?

How complex does the bot need to be? How many subjects should it know and how long should the conversation last?

Industry Benchmarking

I started by analyzing 27 different bots across industries with varying degrees of sophistication and capabilities. My interactions often included dead ends, repeated questions, and incorrect answers. None of the bots were perfect, not even Miss Piggy. There was also a large difference between cute avatar chatbots and customer service chatbots.

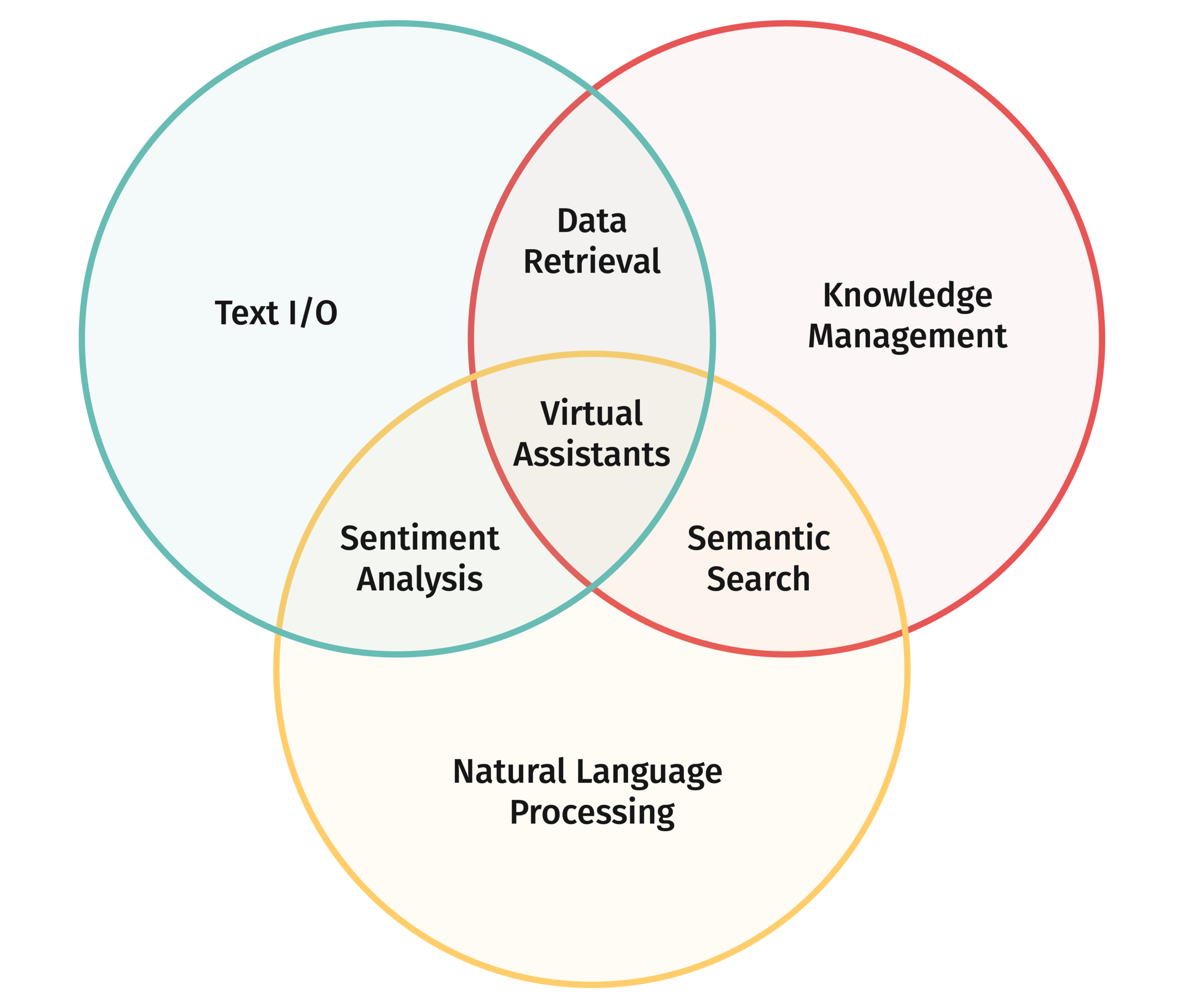

Many factors make a conversational AI effective or ineffective, but overall success depended on the bot’s main function. The bots generally fell into three categories: Conversational, Helpful, and Knowledgeable.

Conversational Bots

Chatbots that were purely for marketing or entertainment purposes were helpful for understanding different personalities and tones of voice. Their repertoire of conversation topics was always limited. I could have a coherent dialogue up to a point, but once I progressed past the initial topic I hit a wall where the bot’s responses stopped making sense or it no longer understood what I was saying. These basic conversational bots follow a specific script, and if you deviate from the programmed path you end up unhappy, confused, or frustrated.

Chatbots require some combination of three capabilities to be effective. These, combined with datasets and backend systems, determine a bot’s skill set.

Helpful Bots

Many of the chatbots I tested were transactional, like Otto. They can help you complete specific tasks, often within the same UI rather than redirecting you. On one hand, chatbots like these can be band-aids over bigger usability issues. On the other, it is convenient to use one interface without further hunting.

For simple tasks, like resetting a password, these bots were efficient and helpful. However, a more complex task, like filling out a form, could become a tedious back-and-forth. This approach could seem endless, mainly because there was no indication of how many more questions the bot would ask.

Knowledgeable Bots

Chatbots that are there to provide or locate information fall under this category. Oftentimes they can feel like an interactive search engine or personality-laden Google, but they can be very useful for things like providing personal account information (usually requires answering security questions first).

When a question had a well-documented or long answer, each bot provided the information differently. Some opened the page for you, some displayed the information within the chat window, and others simply provided the correct link for you to click.

Customer Survey

From here I made some assumptions about what skills and attributes we should focus on to create the largest value to customers. Personality and demeanor were important, and so were the tasks a bot could accomplish. Things like the bot name and avatar seemed to have little impact apart from influencing user expectations based on gender.

To validate our hypotheses, we deployed a customer survey. This allowed us to gauge customers’ familiarity with chatbots, discover where they need assistance, and determine which conversational styles they prefer. We had a sample of 49 responses from customers who had contacted Support within the previous 30 days.

Results

Survey results indicated that overall sentiment towards chatbots was poor. None of these customers had interacted with an Autodesk chatbot, so this opinion stemmed from past experiences elsewhere. The lean towards negative sentiment wasn’t completely surprising given how many chatbots left us dissatisfied during the competitive audit.

Really the only positive about a chat bot is that they are always available, unlike waiting for a chat session with a person. Generally, they do not have all of the information that is required and I end up wasting time dealing with the chat bot and have to repeat everything to a person later.

Only half of respondents knew what a chatbot was, so there was still opportunity to make a good first impression. Based on responses, there was a definite preference for a task-oriented bot versus one that answered questions. We also received enough feedback on tone of voice to write dialogue for further testing.

User Stories

We ideated on possible use cases for an Autodesk Chatbot and mapped out different user journeys to show the different ways a chatbot could assist customers. There was a wide variety of APIs we could give a chatbot that would influence its skillset.

Based on the results of our customer survey, we focused on generating use cases about answering product questions, installation and licensing, and activating products.

Journey map for a home use license

Exploring Conversation Styles

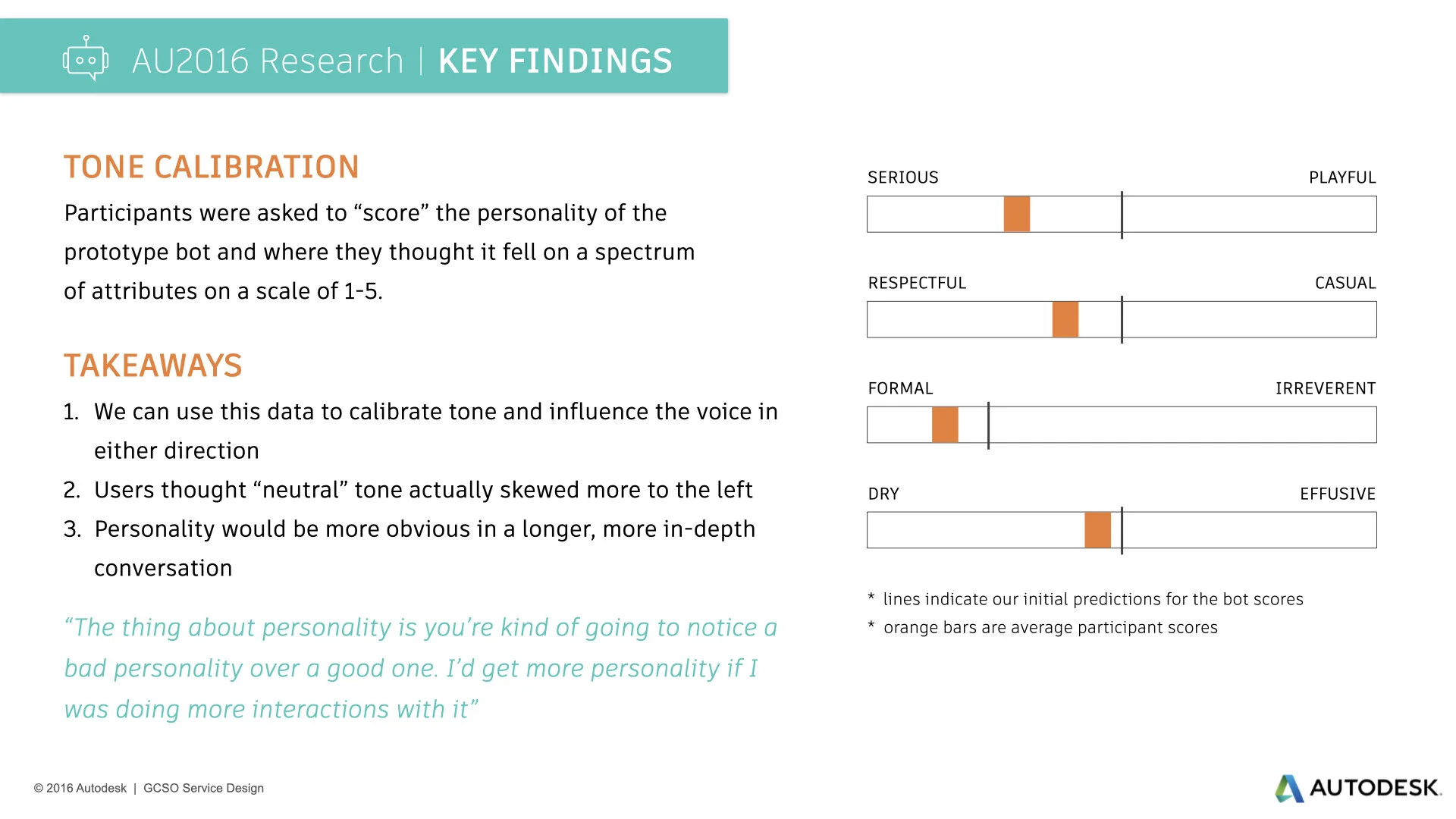

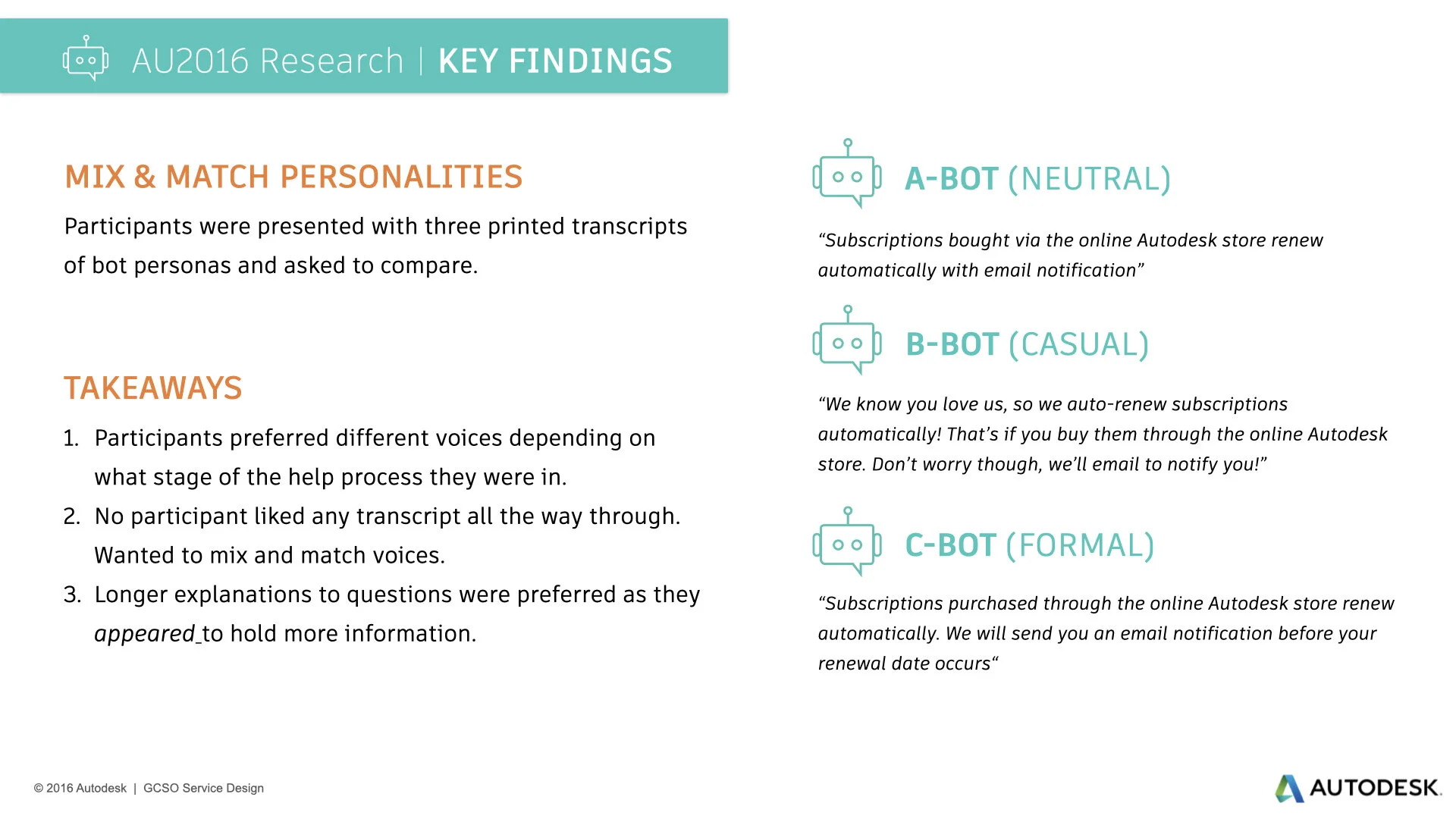

We were exploring two things through scripted conversations. The obvious one: which tone of voice do customers prefer for online support? But we also wanted to know whether what we considered “formal,” “neutral,” and “casual” matched our customers’ definitions.

04 Creating a Prototype

How do you prototype a conversation?

While not one of our key research questions, figuring out how to prototype an experience without an interface was one of the biggest challenges we faced. All digital chatbots have an interface, but our task was to figure out the conversation. Conversations are among our most frequent experiences, but they are not tangible. A conversation can be scripted, recorded, and transcribed, but how do you prototype it? How do you test it?

There were no wireframes or comps to interact with, so my usual box of prototyping tools was zero help. Still, the final chatbot would be on a screen and customers would interact with it using their keyboard, so there were specific conditions we wanted to reflect:

Have a chat interface where users could freely type questions and read responses.

Mimic the response times and capabilities of a bot.

Be completely consistent from user to user for testing purposes.

We eliminated wireframes since they would only work with an exact script, not a natural conversation. We couldn’t use a human on the other end pretending to be a bot because a person’s response times would be inconsistent and there was a high probability of typos or other mistakes. To be completely consistent we needed to build an actual conversational AI and use a tool that allowed custom APIs.

In the end we decided to build a custom Slack integration using the same AI that would be used in the final product.

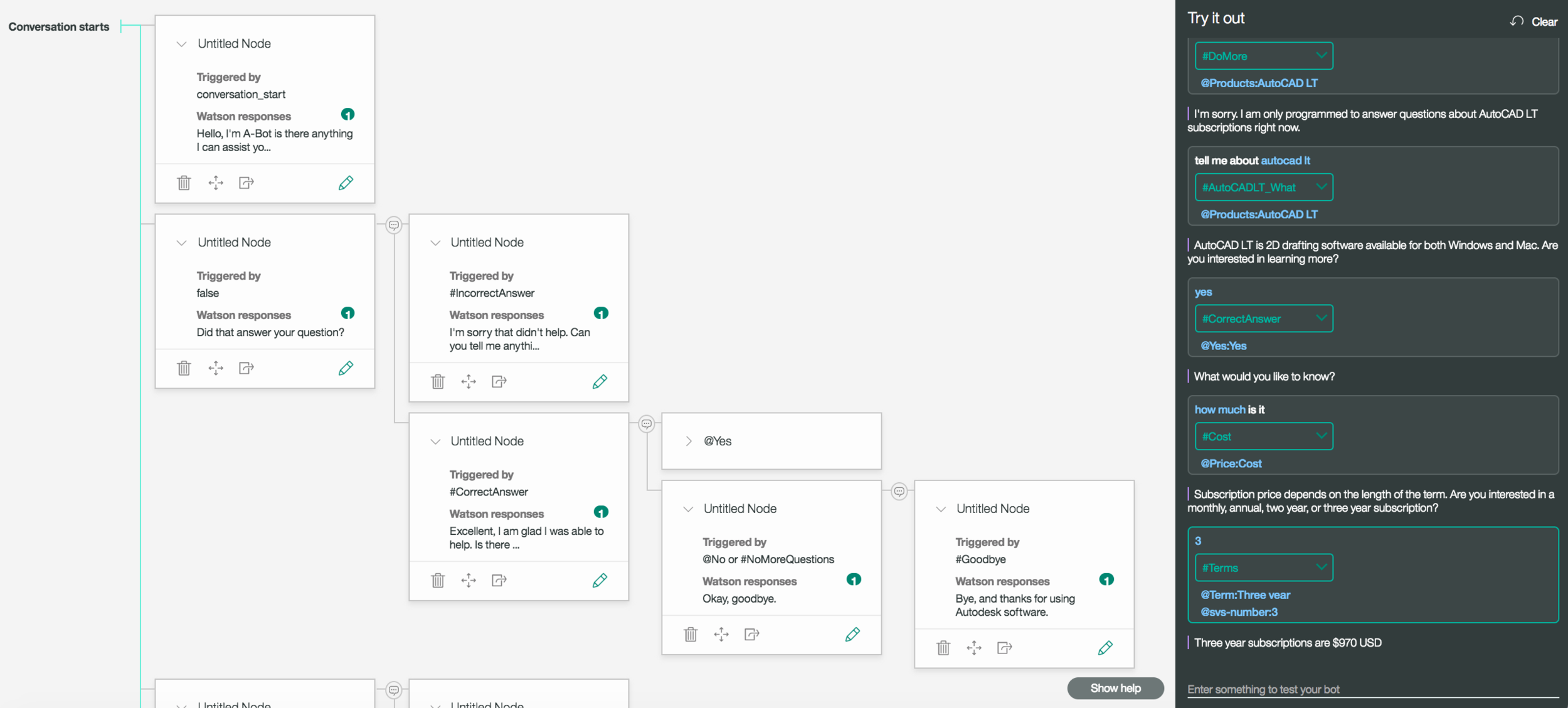

Building a conversational bot using IBM Watson on Bluemix

Training a Bot

For each concept I wanted the bot to understand, I had to create a separate “Intent” and train it with different examples of that concept. I pulled quotes from chat transcripts between our customers and support agents and classified them into different Intents. We needed a data set of 30 or more examples for each Intent we wanted the bot to learn. I decided to keep things simple and only trained it on AutoCAD LT subscriptions, but even by ignoring fringe cases and focusing on a “happy path,” I ended up including over 50 Intents, each with its own data set for training.
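With 50+ Intents each needing 30 or more examples, it helps to check coverage before training. The sketch below is a hypothetical helper, not part of Watson: it assumes the labeled transcript quotes are held as (utterance, Intent) pairs and flags every Intent that falls short of the minimum.

```python
from collections import Counter

# Minimum taken from the text above ("30 or more examples for each Intent").
MIN_EXAMPLES_PER_INTENT = 30

def undertrained_intents(examples, minimum=MIN_EXAMPLES_PER_INTENT):
    """Return {intent: count} for Intents with fewer than `minimum` examples.

    `examples` is a list of (utterance, intent_name) pairs, e.g. quotes
    pulled from chat transcripts and hand-labeled with an Intent.
    The intent names used here are hypothetical.
    """
    counts = Counter(intent for _, intent in examples)
    return {intent: n for intent, n in counts.items() if n < minimum}

# Usage: 30 labeled quotes for one Intent, only 12 for another.
examples = [(f"serial question {i}", "find_serial_number") for i in range(30)]
examples += [(f"renewal question {i}", "renew_subscription") for i in range(12)]
print(undertrained_intents(examples))  # {'renew_subscription': 12}
```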

Building the Logic

After the bot was trained on the different Intents it needed to identify, I built out the logic and structure of the conversation. These seven nodes illustrate the path that requires a simple “yes” or “no” answer from the user, but the structure behind it is more complicated. It was important to account for common divergent paths in a conversation and how a previous topic relates to a current or future topic.

Deploying the Bot in Slack

After completing the prototype, we deployed it to a testing environment.

In addition to the merits of using Slack that I already listed, Slack gave us all the ability to interact with the bot simultaneously. This made it easy for everyone to test and update the logic in an agile working session.

Considerations for the Future Build

I learned a lot about the mechanisms that power a chatbot’s interactions while building the prototype. In the final design we would need stopgap measures against users breaking the bot, becoming frustrated, confused, or losing the conversation thread. Users don’t always take the “happy path” we plan out and refining some of the bot mechanics could help prevent these issues.

Multiple Intents in a Single Input

People often do not chat one idea or Intent per input; they may try to explain the entire problem in a single message. So how do we deal with blocks of text that combine multiple Intents into one paragraph?
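One possible approach, sketched here under loud assumptions, is to split a paragraph into sentences and classify each one separately, so the bot can work through the requests in order. The keyword table below is a hypothetical stand-in for a trained Intent classifier; it is not how Watson classifies input.

```python
import re

# Hypothetical keyword lists standing in for a trained Intent classifier.
INTENT_KEYWORDS = {
    "install_product": ["install", "download"],
    "activate_product": ["activate", "activation", "serial"],
    "billing_question": ["charge", "invoice", "bill"],
}

def intents_in(sentence):
    """Return every Intent whose keywords appear in the sentence."""
    text = sentence.lower()
    return [intent for intent, keywords in INTENT_KEYWORDS.items()
            if any(k in text for k in keywords)]

def split_into_requests(message):
    """Split a message into sentences and pair each with the Intents it mentions."""
    sentences = re.split(r"(?<=[.!?])\s+", message.strip())
    requests = []
    for sentence in sentences:
        found = intents_in(sentence)
        if found:
            requests.append((sentence, found))
    return requests

message = "I downloaded AutoCAD LT yesterday. Now it won't activate and I was charged twice!"
for sentence, intents in split_into_requests(message):
    print(intents, "<-", sentence)
```

Even split this way, one sentence can still carry two Intents (activation and billing here), so the bot would also need a rule for which one to address first.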

Hierarchy of Nodes

Retrieval-based bots match user inputs against a list of Intents organized into a hierarchy. Watson functions by responding to the first node it matches in a list. Therefore, we must prioritize which nodes the AI looks at first when analyzing an input.
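A minimal sketch of first-match evaluation shows why node order matters. The `Node` class and the example conditions are hypothetical and do not mirror Watson's dialog API; they only reproduce the "respond to the first node that matches" behavior described above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Node:
    name: str
    condition: Callable[[str], bool]  # does this node match the user input?
    response: str

def respond(nodes: List[Node], user_input: str) -> Optional[str]:
    """Return the response of the first node, in list order, whose condition matches."""
    for node in nodes:
        if node.condition(user_input):
            return node.response
    return None

# Hypothetical nodes: the specific node must sit above the general one.
cancel = Node("cancel_subscription",
              lambda s: "cancel" in s.lower() and "subscription" in s.lower(),
              "I can help you cancel your subscription.")
general = Node("subscription_general",
               lambda s: "subscription" in s.lower(),
               "Here is an overview of your subscription options.")
fallback = Node("anything_else", lambda s: True,
                "Sorry, I didn't understand. Could you rephrase?")

print(respond([cancel, general, fallback], "How do I cancel my subscription?"))
print(respond([general, cancel, fallback], "How do I cancel my subscription?"))
```

In the second call the general node is listed first, so it answers and the more specific cancellation node is never reached.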

Setting Confidence Levels

We can set the confidence level needed before the AI responds to any Intent. It is easy to deploy a response when we know it is correct, but do we respond to inputs that score lower confidence levels? It could guess wrong.
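One way to answer that question is to use two thresholds rather than one: answer outright when confidence is high, ask the user to confirm when it is middling, and ask them to rephrase when it is low. The sketch below assumes the classifier returns a `{intent: confidence}` dictionary; both threshold values are hypothetical and would need tuning against real test conversations.

```python
# Hypothetical thresholds; real values would be tuned during testing.
RESPOND_THRESHOLD = 0.7
CONFIRM_THRESHOLD = 0.4

def choose_action(intent_scores):
    """Decide how to reply given {intent: confidence} scores from a classifier."""
    if not intent_scores:
        return ("rephrase", None)
    best_intent, confidence = max(intent_scores.items(), key=lambda kv: kv[1])
    if confidence >= RESPOND_THRESHOLD:
        return ("answer", best_intent)    # confident enough to respond directly
    if confidence >= CONFIRM_THRESHOLD:
        return ("confirm", best_intent)   # e.g. "Did you mean ...?"
    return ("rephrase", None)             # too likely to guess wrong

print(choose_action({"activate_product": 0.55, "install_product": 0.30}))
```

The middle "confirm" band trades one extra question for a lower chance of answering the wrong question confidently.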

Abandoned Tasks

Often a customer goes silent in the middle of a conversation or changes the topic. What will trigger the need to jump from one path to another? Should we end the current session and start over if the customer is silent above a specified time period?
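The "start over after a period of silence" option can be sketched as an idle timeout on the session. Everything here is hypothetical, including the 10-minute limit, since the right value was one of the open questions. The optional `now` parameter exists only so the behavior can be demonstrated without waiting.

```python
import time

# Hypothetical idle limit; choosing the real value was an open question.
IDLE_TIMEOUT_SECONDS = 10 * 60

class Session:
    """Tracks the current conversation path and when the user last spoke."""

    def __init__(self, now=None):
        self.topic = None
        self.last_activity = time.monotonic() if now is None else now

    def on_message(self, topic, now=None):
        """Record a user message and return the topic the bot should continue with.

        If the user was idle longer than the timeout, the old path is treated
        as abandoned. If the message carries a new topic, the bot jumps to it.
        """
        now = time.monotonic() if now is None else now
        if now - self.last_activity > IDLE_TIMEOUT_SECONDS:
            self.topic = None          # abandoned: start the conversation over
        if topic is not None:
            self.topic = topic         # explicit topic change
        self.last_activity = now
        return self.topic

session = Session(now=0)
session.on_message("activate_product", now=5)
print(session.on_message(None, now=65))    # still on the activation path
print(session.on_message(None, now=1000))  # idle too long, so None (start over)
```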

05 Testing at AU2016

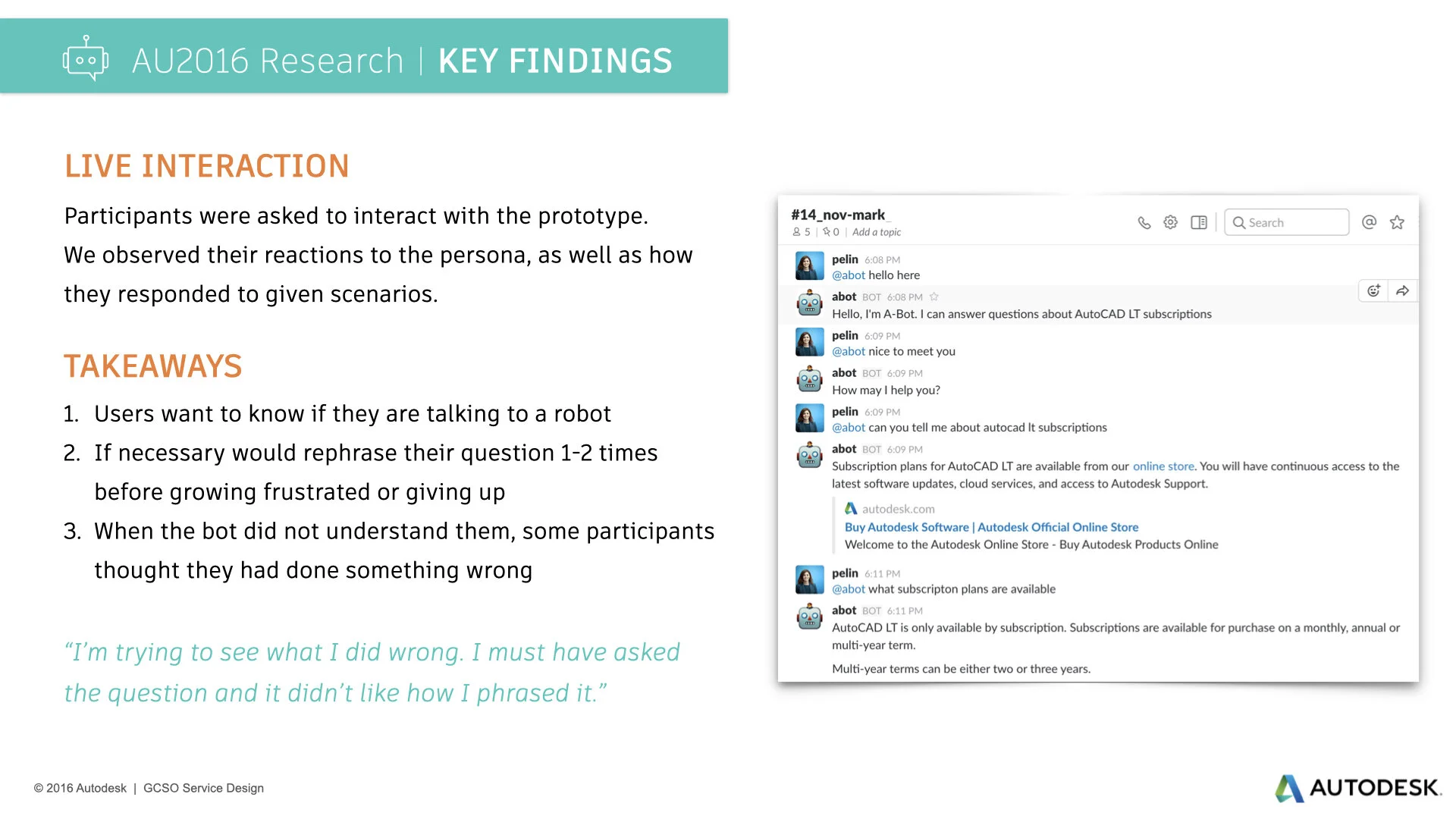

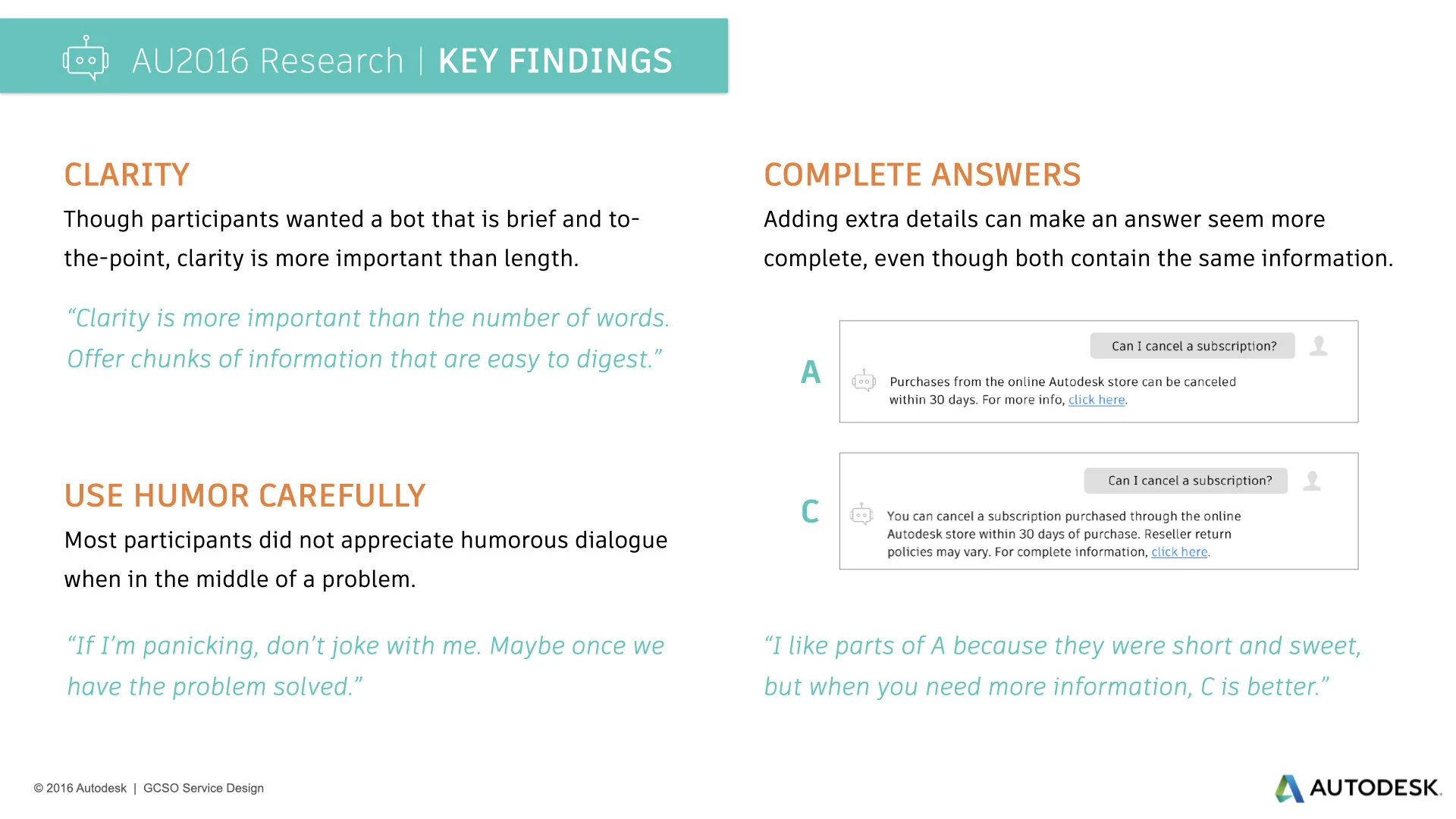

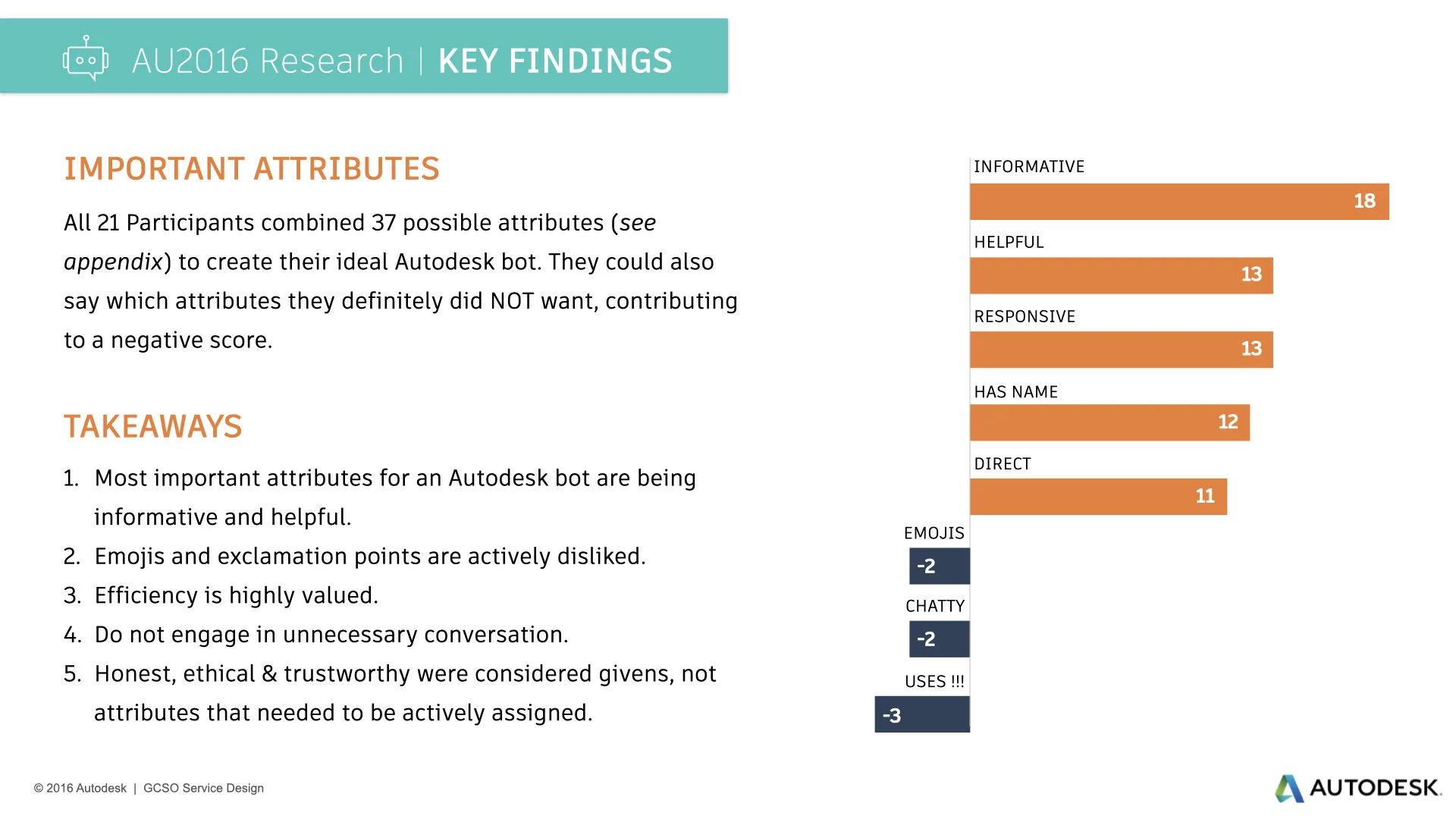

I created all of the assets used for the moderated testing conducted at Autodesk University 2016 with customers attending the conference. We used “think-aloud” techniques across three different exercises.

Evaluation of a conversation with the interactive prototype deployed in Slack. We recorded verbal comments and screen interactions, and participants filled out a scorecard.

Choosing a preferred tone of voice from different scripted conversations, and marking the specific phrases they preferred among the three options

Card sort choosing most important attributes for a chatbot to exhibit

06 Final Recommendations

Some findings & recommendations omitted due to confidentiality

Tell users it’s a Bot

Users will make more allowances for a bot than a human. Also, pretending a bot is human is tantamount to tricking the user and can make them feel duped.

Do not give the bot quintessentially human traits such as long typing delays, bad grammar, or misspellings.

Bot messaging should clearly indicate it is not human, but this does not preclude it from having a personality.

Avoid the Uncanny Valley with avatar and speech patterns. A response time less than 1 second was unsettling for users.

Outline capabilities

Tell users what the bot is able to accomplish. Knowing bot capabilities could make the user more understanding of errors that occur outside the bot’s wheelhouse.

Have an identity

A title makes the bot more identifiable, but it doesn’t necessarily need to take on the identity or persona of a human.

Use context and situational responses

Based on users’ word choices and vocabulary, we can understand their attitude and the severity of the problem.

By using conditional responses based on specific trigger words, we can address the same subject matter in different ways to mollify the user.

Viewing the current point of conversation in the context of previous lines allows the bot to appear less repetitive and more intelligent.

Longer responses to important questions make the user more confident in the information given. Quick responses are fine for unimportant dialogue.

Variation and duplicate responses

Don’t parrot back what the user typed when we are sure of intent, and don’t give the same answer multiple times. If necessary, users will rephrase their question 1-2 times if the bot asks them to, but then want to talk to a person.
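Both rules can be enforced with a small amount of state. In the hypothetical sketch below, the bot asks for a rephrase at most twice (the "1-2 times" users tolerated) and then hands off to a person. It treats sending the same answer twice as a sign of misunderstanding, which also covers the conversation loops warned about later in these recommendations. The class and its return values are illustrative, not part of any bot framework.

```python
MAX_REPHRASE_REQUESTS = 2  # users tolerated rephrasing 1-2 times

class ConversationGuard:
    """Hypothetical helper that keeps the bot from repeating itself or looping."""

    def __init__(self):
        self.rephrase_requests = 0
        self.sent_answers = set()

    def on_not_understood(self):
        """Ask the user to rephrase up to the limit, then hand off to a person."""
        if self.rephrase_requests < MAX_REPHRASE_REQUESTS:
            self.rephrase_requests += 1
            return "rephrase"
        return "handoff"

    def on_answer(self, answer):
        """Send each answer only once; a repeat suggests a misunderstanding."""
        if answer in self.sent_answers:
            return "handoff"
        self.sent_answers.add(answer)
        self.rephrase_requests = 0  # the user was understood, so reset the count
        return answer

guard = ConversationGuard()
print([guard.on_not_understood() for _ in range(3)])  # two rephrases, then a handoff
```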

Be careful apologizing

Use apologies sparingly. Too many or overly verbose apologies annoyed some users. No one expected the bot to be perfect, so it seemed unnecessary.

Users want to be understood

Language and punctuation when typing differ from everyday conversation. The AI needs to be capable of interpreting common chat speak and identifying the important words in blocks of text.

Bot should understand acronyms (related to Autodesk), abbreviations, unusual capitalizations, and common misspellings.

Understand what they are typing, whether or not it is correct spelling/punctuation.

Recognize the problem they are having and match it with the correct solution the first time.
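Before classification, a bot can normalize input so that abbreviations, odd capitalization, and near-misspellings map onto words it was trained on. This sketch uses Python's standard `difflib.get_close_matches` for fuzzy matching. The abbreviation table and vocabulary are hypothetical placeholders for a real product lexicon, and the 0.8 cutoff is an assumption.

```python
import difflib
import re

# Hypothetical abbreviation table and vocabulary; a real bot would use its own lexicon.
ABBREVIATIONS = {"acad": "autocad", "sub": "subscription", "pls": "please"}
VOCABULARY = ["autocad", "subscription", "activate", "install", "license", "serial", "number"]

def normalize(text, cutoff=0.8):
    """Lowercase, expand known abbreviations, and snap near-misspellings to known words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())  # also drops punctuation
    normalized = []
    for token in tokens:
        token = ABBREVIATIONS.get(token, token)
        match = difflib.get_close_matches(token, VOCABULARY, n=1, cutoff=cutoff)
        normalized.append(match[0] if match else token)
    return " ".join(normalized)

print(normalize("Pls help, my ACAD sub wont ACTIVAET"))
# please help my autocad subscription wont activate
```

A higher cutoff avoids "correcting" ordinary words into product terms, at the cost of missing more heavily garbled typos.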

Recognize when a bot can’t help

Recognize which problems are beyond a bot’s abilities and get them connected to the right person to help. The less back-and-forth before this happens the better.

Minimize frustrations

Most frustration for users stemmed from not being understood. Frustrations were lower if intent was identified correctly, even if the bot was unable to help. If the bot doesn’t understand, transfer the user to a person while incurring minimal ire.

Don’t waste their time

Autodesk customers are not chatting with our bot for entertainment. They have work to do and are coming to us because something is wrong, or they have an important question that needs an answer.

Our customers value efficiency, respect that.

Give them valuable information and show them where they can find out more.

Do not get stuck in conversation loops. If the bot displays repetitive answers, there is a high probability that the bot is misunderstanding the issue.

Get users to the right page/person the first time. Multiple transfers can be extremely frustrating to users, as is making customers repeat themselves after a transfer.

Use sparingly

Bots are not great at long discursive conversations. Interactions should be short and precise, and conversations should be a simple back and forth.

Don’t play hide and seek

We determined the bot could be universally useful on all of our web properties and that it didn’t make sense to have a chatbot available some places and not others, possibly forcing a customer to go look for it. A customer that is lost or needs to complete a specific task should be able to depend on a chatbot that is always located in the same place on every page.

Give specific options or feedback

It’s impossible to program a bot to address all potential conversation topics and the more alleyways a conversation can go down, the greater the possibility for dead ends.

Show options when there is a limited set to choose from, don’t make users guess the correct input.

Give clear selections, like yes and no buttons rather than making the user engage in unnecessary typing.

Keep the user flow going by giving instructions and progress updates where necessary.

Use forms for structured inputs

Avoid long back-and-forth conversations by using forms. This sidesteps the complications of parsing unpredictable plain text inputs. Validate inputs to avoid submission errors.
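As an illustration of validating structured input before submission, here is a hypothetical activation form check. The field names are assumptions, and the serial-number pattern assumes a three-digit, hyphen, eight-digit format. Errors are returned per field, so the chat UI could show each message next to the field it applies to.

```python
import re

# Assumed formats for illustration only.
SERIAL_PATTERN = re.compile(r"^\d{3}-\d{8}$")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate_activation_form(form):
    """Return {field: error message}; an empty dict means the form can be submitted."""
    errors = {}
    email = form.get("email", "").strip()
    serial = form.get("serial_number", "").strip()
    if not email:
        errors["email"] = "Email is required."
    elif not EMAIL_PATTERN.match(email):
        errors["email"] = "Enter a valid email address."
    if not serial:
        errors["serial_number"] = "Serial number is required."
    elif not SERIAL_PATTERN.match(serial):
        errors["serial_number"] = "Serial numbers look like 123-12345678."
    return errors

print(validate_activation_form({"email": "user@example.com", "serial_number": "12345"}))
```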

Stay in the interface, but give an exit strategy

Users preferred completing transactions within the chat interface vs. going to a different page. However, this isn’t always the case. Provide options for contacting a human, and give them the ability to “make this robot thing go away” by minimizing bot UI when not needed.

07 Conclusion

Unlike a design project, this research had no final deliverables beyond a prototype and recommendations on how and where a chatbot should be deployed. We accomplished our overall goal, proving customers would be comfortable chatting with a help bot. Through the testing conducted at AU, we came away with some valuable insight into the Do’s and Don’ts of building a chatbot.

The current version of AVA is not quite the free-input, chat-based bot we had initially envisioned, but a bot’s sophistication depends on the libraries and APIs behind it. AVA is currently good at about 6 different things, so a heavily guided conversation is preferable. Bots with limited recognition skills that invite unguided conversations risk a high error rate. As a result, the current experience is more akin to a “Choose Your Own Adventure” than a true conversation.

Overall, this project provided our Machine-Assisted Engagement team with valuable insights into our customers’ potential relationship with a chatbot, and where our main focus should be in building it. After our research was complete I moved into a consulting role on the project for the design and development phases.